Familiar AI voices, Siri, Alexa and others power smart living. They help you with translation and even chat with you. But what differentiates humans from robots or artificial intelligence (AI) is the ability to communicate, generating complex cognitive interactions like the expression of emotions in speech which can hardly be comprehended by programmed algorithms. Now, AI technology is here to augment and facilitate communication between humans.

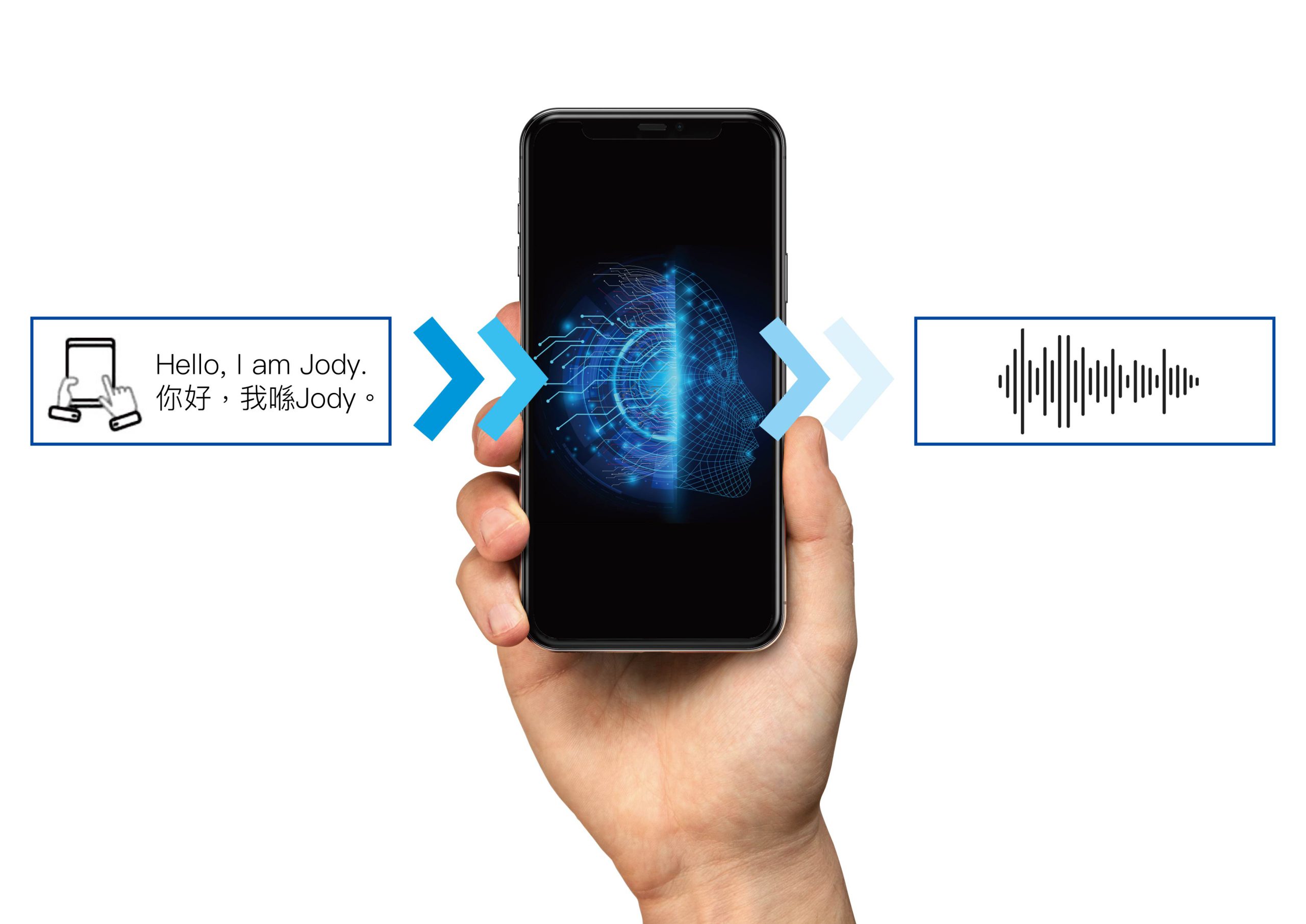

Professor Tan LEE, of the Department of Electronic Engineering, a specialist in deep learning, speech and audio signal processing in The Chinese University of Hong Kong (CUHK), has been developing spoken language technologies that enable AI to reproduce a person’s voice. In the Digital Signal Processing and Speech Technology Laboratory, he has developed, for the first time, a personalised Chinese text-to-speech system (TTS). What has drawn much of the community’s attention recently is his team’s success in helping a patient, Jody, with oral-laryngeal cancer to reproduce and “clone” her own voice with AI speech technology. The system synthesised the Jody’s voice characteristics and speaking styles and allowed her to “speak” just by typing on the mobile phone.

It started with data collection. The team made five recordings with the patient, covering Cantonese oral material like casual conversations, story-telling and bible readings to include as much of her linguistic and speech characteristics as possible. These included syntax, onsets, consonants, common collocations, and stress. These were processed by the laboratory, to form a body of her commonly used language, speech characteristics, phrasing, and collocations. The AI tries to learn as much as possible about the voice of the patients from this recorded data, or what is called training data, before their ability to speak is lost entirely. A speech synthesis model is then constructed, drawn from the patient’s speech at that time that carries a close resemblance to the original voice. This is then integrated into a mobile app, personal to that patient who can tap out what they want to say and be heard in their own voice.

The synthesis does not attempt to create an improved or more understandable version of the voice. “There is technology to do that but that is not the purpose,” says Professor Lee. “Our goal is to preserve the voice as it stands. It is to reflect the features that the patient wants to keep, faithfully, in their original form. That means a lot to a person.”

Up to now, the team has been in touch with medical colleagues in learning about other diseases like Parkinson’s and amyotrophic lateral sclerosis (ALS) where people might also benefit and to whom he can offer the option. He has experienced situations where the family has been keen on the app but the patient, undergoing enervating medical treatment, has refused it. “I don’t want to press this and cause psychological pressure just because the technology is there. As an engineer, I want to make the technology better for those who feel comfortable using it and improve their lives.”

In the spirit of AI, or “learning from data”, it is always a case of the more the data, the better the AI performance. However, Professor Lee is trying to look at the interest of the patient. If a speech model is wanted that covers every single variation of the voice or speaking style of a person, a lot of recordings are needed, and a lot of effort from the patient too. “But we can never do that. It would be endless work,” he adds. What is critical about human speech is that it contains a lot of redundancy. The research team is trying to exclude all the unnecessary repetitions and data, and minimise what they need to record. It is about a struggle in balancing quality and quantity. “Our current objective is to streamline the procedure and put less of a burden on the patient. We are looking to the patients being able to make a recording at home by themselves, without supervision, using a portable system or a tablet computer with a collar microphone,” he says.