Nearly 1 in every 1,000 babies worldwide is born with hearing loss or develops it after birth, and more than 90% of them are born to hearing parents. Hearing people who live and deal with this deaf population urgently need to learn sign language to communicate with them. Yet many hearing people trying to use sign language are not as aware as “native” signers of what they are signing, especially in coordinating hands and fingers and in using non-manual features like facial expressions. Finding technologies to bridge the gap between the deaf and hearing worlds has been a long-running concern of Professor Felix SZE Yim Binh, Deputy Director of the Centre for Sign Linguistics and Deaf Studies (CSLDS) at The Chinese University of Hong Kong (CUHK). Now “SignTown” is the first step in Project Shuwa, an initiative to tackle these difficulties run jointly by Professor Sze’s team, Google, The Nippon Foundation (Japan), and the Sign Language Research Center of Kwansei Gakuin University.

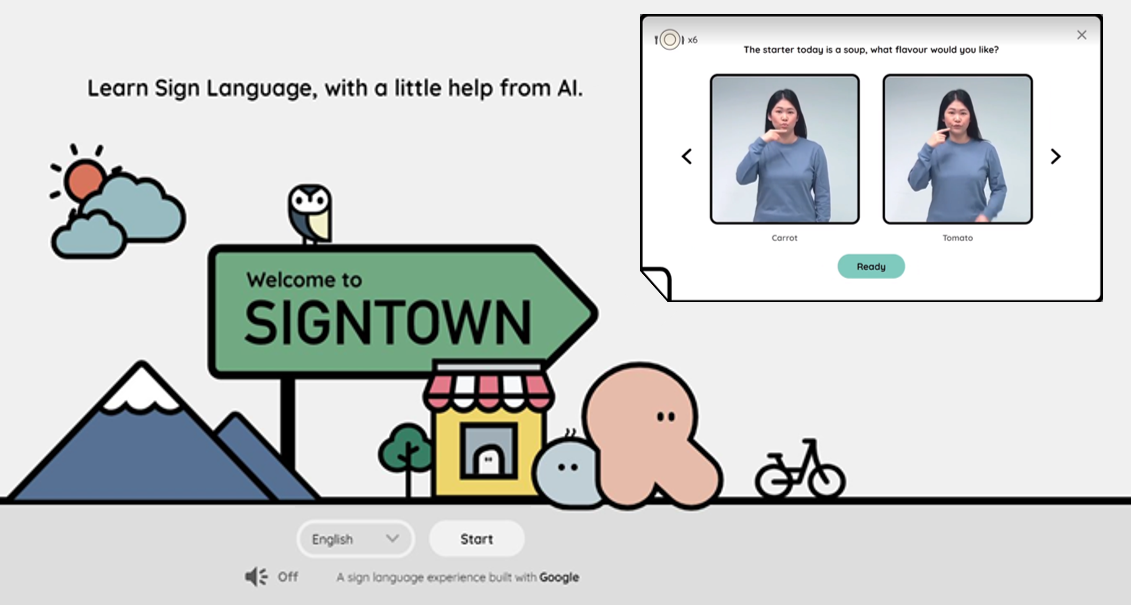

SignTown players are placed in a make-believe town where they have to make signs in front of their laptop camera to complete each task in a series of daily activities, such as packing for a trip, finding a hotel, or ordering food at a café. On screen, actors in two frames give the signs for items connected with an activity. The player chooses one and, on cue, imitates it to the camera. The AI-powered recognition model gives immediate feedback on the player’s accuracy. Cute hand-shaped characters scattered through the game explain the concepts of “sign language”, “deaf people” and “deaf culture”. Both hearing and deaf people can learn about sign language and deaf culture, in both Hong Kong and Japanese sign languages.

CSLDS has been collaborating with The Nippon Foundation (Japan) on sign language development since 2003 and started the Asian SignBank, an online lexical database whose functions have been expanding. In 2019, the Foundation approached CSLDS about working with Google to integrate an AI function. “AI is crucial for the Asian SignBank in moving on. As things stood, a correct sign could only be found by typing a word into the dictionary box, or the meaning of a sign found by identifying a handshape in the image album, neither of which are very exact,” said Professor Sze. For a decade, researchers had tried to develop better recognition technology, and throughout that time Professor Sze had been dreaming of a way whereby, for example, a sign language video clip could be uploaded to a site and the technology could recognise the sign and its language of origin. She travelled to many conferences where attempts at this were reported but none had succeeded, so she was very keen to take up the offer from Google. Google’s objective was to create a learning method that was directly available to the community, and it suggested an online game.

Stemming from this idea, Project Shuwa built the world’s first successful machine-learning-based model that can recognise three-dimensional sign language movements, and track and analyse hand and body movements as well as facial expressions, using a standard camera. This novel technology is the foundation of SignTown. Professor Sze says, “Exclusion of any of these parameters could result in ungrammatical or uninterpretable messages. While sign languages of different regions vary, the phonetic features are universal and the number of combinations is finite, therefore recognition models are possible. Yet, past models focused mainly on hands and gestures. Strict equipment requirements including 3D cameras and digital gloves for capturing three-dimensional sign language movements left the technology unpopular.” SignTown marks a technological breakthrough that overcomes this bottleneck in current recognition models.

What is lacking in traditional sign language recognition tools is compensated for

Professor Sze emphasises that the role of CSLDS in the collaboration is to provide expertise and linguistic knowledge for developing the sign language recognition model and to organise the collection of Hong Kong Sign Language data as input for the model. “A sign needs breaking down into different components and there are non-manual features like facial expressions and head positions. If the purpose is to help identify a sign from the video input, we as sign linguists believe that it is very necessary that the technology can tweeze apart all these components. We advised our collaborator that to be helpful, the technology must tell whether a signer is making a sign correctly.”
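To make the idea of breaking a sign into components concrete, here is a minimal illustrative sketch in Python. It is not Project Shuwa’s model: the `SignComponents` class, the `mismatched_components` helper and every label below are hypothetical, chosen only to show how a learner’s attempt could be compared with a target sign one component at a time, so that feedback can name what went wrong.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class SignComponents:
    """Hypothetical breakdown of one sign into manual and non-manual parts."""
    handshape: str
    location: str
    movement: str
    facial_expression: str  # non-manual feature
    head_position: str      # non-manual feature


def mismatched_components(target: SignComponents, attempt: SignComponents) -> list[str]:
    """Return the names of the components where the attempt differs from the target."""
    return [
        f.name
        for f in fields(target)
        if getattr(target, f.name) != getattr(attempt, f.name)
    ]


# Invented labels for illustration only; real systems work from video, not strings.
target = SignComponents("flat-hand", "chin", "forward", "neutral", "upright")
attempt = SignComponents("flat-hand", "chin", "forward", "raised-brows", "upright")

print(mismatched_components(target, attempt))  # ['facial_expression']
```

In this toy example the hands are correct but the facial expression is not, which is the kind of non-manual error that a hands-only comparison would miss.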

Professor Sze was a graduate in English and a linguistics postgraduate but turned to sign language research because of her fascination with sign language and deaf culture. To her, the prospects for SignTown and for signing generally in Hong Kong seem bright. She reports soaring interest in the sign language course that CUHK runs for undergraduates. “We offer classes in level 1 to 6 and in this semester, we are able to open 11 new classes for level 1.”

“We don’t expect most hearing people to become expert users of sign language but we do hope that many will be willing to learn and find it fun. It is just one big step forward in trying to eliminate discrimination between hearing people and deaf people.”

SignTown is not fully developed yet, but there are plans to expand the recognition model. For example, some grammar points are expressed by moving the torso or the head forward a particular distance, which is as yet difficult to detect from a front view alone. “There is still a long way to go,” says Professor Sze, “but SignTown has opened the door to a lot more opportunities for people to learn and know more about sign language in real life situations. There are plans to incorporate more signs from different countries in SignTown so that people around the world may learn more sign languages and know their differences.”

The next move in Project Shuwa is to generate an online sign dictionary that not only incorporates a search function, but also provides a virtual platform to facilitate sign language learning and documentation based on AI technology. The ultimate goal of the team is to develop an automatic translation model like Google Translate that can recognise natural conversations in sign language and convert them into spoken language using the cameras on computers and smartphones.

To learn sign language with SignTown: https://sign.town/

More about Project Shuwa: https://projectshuwa.org/