Surgeons who must watch the operation site closely and hold a scalpel for long periods during conventional surgery are prone to eye fatigue and shaking hands. Surgical robots enable surgeons to complete a variety of minimally invasive surgeries in obstetrics and gynecology, urology, and otorhinolaryngology. They perform well in hard-to-access parts of the body, resulting in smaller incisions and less blood loss during the operation. As robotic surgery gains wide acceptance, many hospitals around the globe have introduced surgical robots to optimise surgical efficiency and safety.

Robotic surgery is performed by robot arms that mainly imitate the movements the surgeon makes at the control console. Professor Qi DOU, an expert in artificial intelligence from The Chinese University of Hong Kong (CUHK), believes that AI assistance and high-precision gesture recognition support the surgeon’s operating skills and contribute to the success of surgery.

Enhancing the gesture recognition of robots for a better understanding of complex procedures

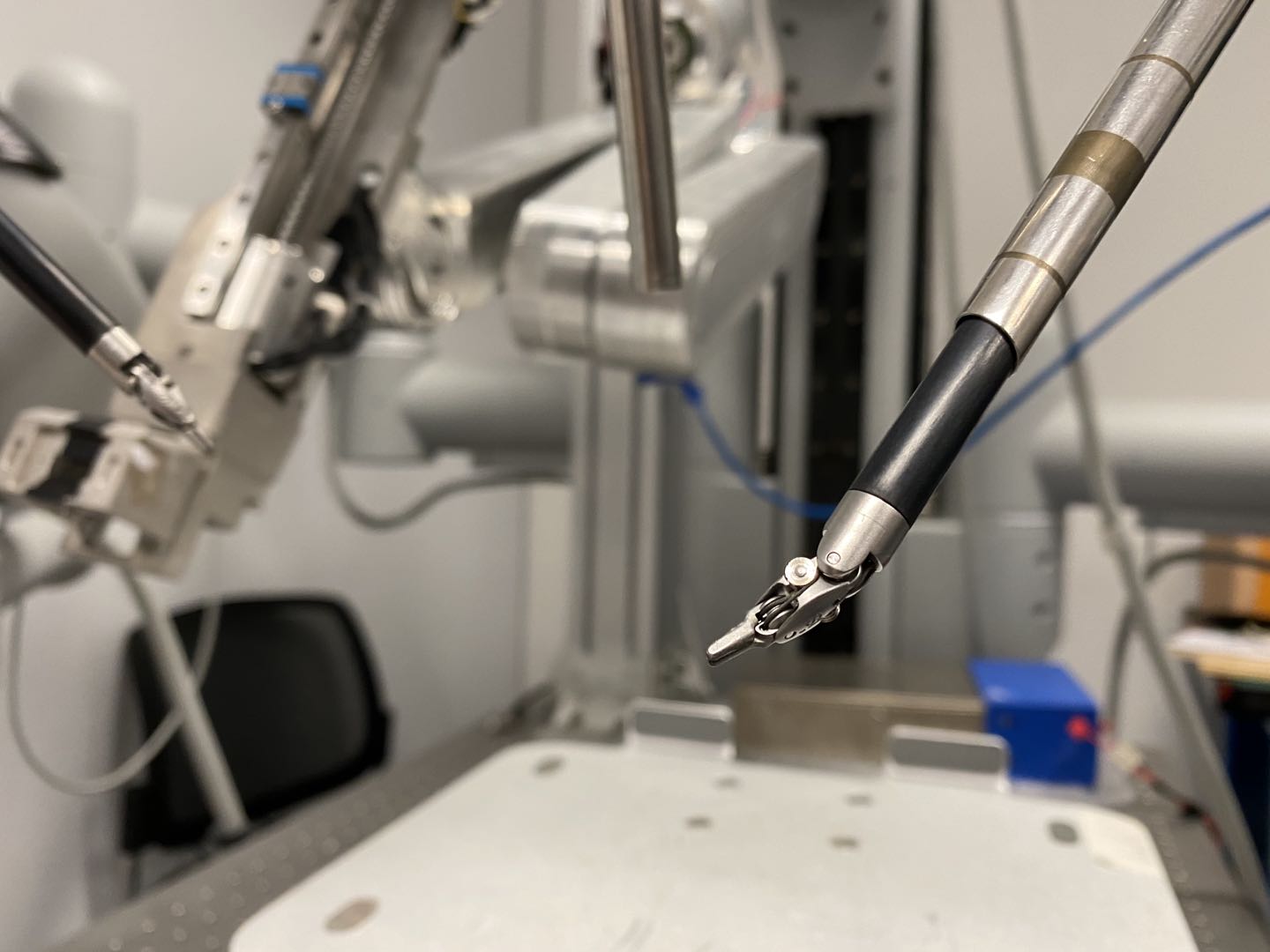

Recent methods for training gesture recognition models either rely solely on kinematics data or employ deep convolutional neural networks to improve visual image processing. However, Professor Dou considers that these solutions draw on only a single source of information and therefore fail to meet the demands of complicated operations, in which surgical robots must switch frequently between actions to carry out multiple procedures. She further explained, “Minimally invasive surgery relies on the hand-eye coordination of the surgeons, and it is the same for surgical robots. The endoscope camera attached to the robotic arm serves as the ‘eye’ providing views of the surgical site and guiding its hands to collaboratively conduct actions in the right places, while hands drive changes in the visual scenes. By integrating the complementary information inherent in visual and kinematic multi-modal data recorded from the surgical robots, such hand-eye coordination can be used to better understand the complex procedures.”

The study of robotic surgery intelligence draws on both AI and medical knowledge, and it remains challenging to form a large-scale interdisciplinary team in Hong Kong or overseas. Professor Dou studied biomedical engineering as an undergraduate and specialised in deep learning, AI technology and its applications in medicine during her PhD and postdoctoral research. Since joining the Department of Computer Science and Engineering at CUHK in 2020, Professor Dou has led her team to focus on advancing surgical robots with machine intelligence, and recently developed an AI system with a novel deep learning method that substantially enhances the gesture recognition of medical robots. Her team collaborates closely with engineering faculty members in robotics and medical faculty members in surgery on this interdisciplinary research at CUHK.

Accuracy of new system outperforms the current methods

The surgical robot adopting the new AI system has an endoscope camera that instantly forms a 3D view of the surgical site, and an operation console from which surgeons manipulate the instruments. AI assistance can also potentially deliver an alert when the surgical tools approach dangerous areas near veins and nerves. The key innovation is the application of “Graph Neural Networks”, a deep learning method applied here to image processing and video recognition, trained with about a hundred recorded operations by surgeons to give robots a better understanding of the video content and of the surgeons’ wrist movements.
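To make the idea of combining visual and kinematic information on a graph more concrete, here is a minimal sketch of one round of message passing, the basic operation in graph neural networks. It is not the team's implementation: the three node names, the feature values, the `self_weight` parameter and the simple mean aggregation are all assumptions chosen for illustration, and a real graph neural network would use learned weights and non-linearities.

```python
# Illustrative only: one round of mean-aggregation message passing over a
# tiny fully connected graph. Node names and feature values are hypothetical
# placeholders, not data or architecture details from the study.

def message_passing_step(features, edges, self_weight=0.5):
    """Blend each node's feature vector with the mean of its neighbours'."""
    updated = {}
    for node, vec in features.items():
        neighbours = [features[n] for n in edges[node]]
        if not neighbours:
            # An isolated node keeps its own features unchanged.
            updated[node] = list(vec)
            continue
        dim = len(vec)
        mean = [sum(nb[i] for nb in neighbours) / len(neighbours)
                for i in range(dim)]
        updated[node] = [self_weight * vec[i] + (1.0 - self_weight) * mean[i]
                         for i in range(dim)]
    return updated


# Hypothetical 2-dimensional embeddings: one node for the endoscope video,
# one for each robot arm's kinematics.
features = {
    "video": [1.0, 0.0],
    "left_arm": [0.0, 1.0],
    "right_arm": [0.0, 0.0],
}
# Every node exchanges information with the other two.
edges = {
    "video": ["left_arm", "right_arm"],
    "left_arm": ["video", "right_arm"],
    "right_arm": ["video", "left_arm"],
}

fused = message_passing_step(features, edges)
print(fused)
```

After one step, each node's features contain a contribution from the other two, which is the sense in which the “eye” and the “hands” inform each other.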

It is the first-ever attempt to explore advanced AI techniques for correlating vision and kinematics to achieve better surgical gesture recognition based on hand-eye coordination in robots. The new system achieved state-of-the-art performance on the public dataset “JHU-ISI Gesture and Skill Assessment Working Set” (JIGSAWS). Its accuracy in both the suturing and knot-tying tasks is as high as 88%.
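The article does not say how the accuracy figure was computed. One common way to score gesture recognition is frame-wise accuracy, the fraction of video frames whose predicted gesture label matches the annotated one. The sketch below assumes that definition; the function name and the gesture labels are hypothetical and do not come from the dataset.

```python
# Illustrative only: frame-wise accuracy under the assumption that each video
# frame carries exactly one gesture label. The labels below are made up.

def frame_accuracy(predicted, reference):
    """Return the fraction of frames where the predicted label is correct."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    if not reference:
        raise ValueError("at least one frame is required")
    correct = sum(p == r for p, r in zip(predicted, reference))
    return correct / len(reference)


reference = ["G1", "G1", "G2", "G2", "G3", "G3", "G3", "G4"]
predicted = ["G1", "G1", "G2", "G3", "G3", "G3", "G3", "G4"]

accuracy = frame_accuracy(predicted, reference)
print(f"{accuracy:.1%}")  # 7 of 8 frames match
```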

The new system may be widely applicable to different platforms

A wide range of surgical robotic systems and related technologies has emerged on the market, and a method developed on one kind of robot may not be applicable to all the systems used in hospitals. To demonstrate the generalisability of the new system, Professor Dou’s team invited the Laboratory for Computational Sensing and Robotics at Johns Hopkins University (JHU) to validate it on two da Vinci Research Kit (dVRK) platforms, one established at CUHK and one at JHU, through the international collaboration in the Multi-scale Medical Robotics Centre, CUHK. The robotic arms had to perform a peg transfer task, which is one of the most popular tasks in surgical skill training.

The result was promising, with stable performance on both platforms. “This validation is very important. It demonstrates a satisfactory generalisability and independence of the system, which is crucial for data-driven AI techniques to be widely incorporated into different medical robots,” said Professor Dou.

Opening a new door to research on medical robots

Like humans, surgical robots have strengths and weaknesses, and their precision varies from one action to another. Professor Dou hopes that artificial intelligence providing cognitive assistance during surgical procedures will help robots achieve greater precision across various actions, and that the system can be tested on platforms in different hospitals.

The research team received the Best Paper Award in Medical Robotics at the IEEE International Conference on Robotics and Automation 2021 (ICRA 2021). The method also won first place in the “MIcro-Surgical Anastomose Workflow recognition on training sessions” (MISAW) sub-challenge at the International Conference on Medical Image Computing and Computer Assisted Intervention 2020 (MICCAI 2020).

On commercialising the new AI system for surgical robots such as the da Vinci Surgical System, Professor Dou said, “The surgical robot is still relatively new to the profession, which takes time to adapt. Robotic surgery with intelligence will be important for surgeons, especially the young generation, but there is still a long way to transferring this technology. Instead, it gives hope by opening a new door to significant improvement in intelligent perception by medical robots, and to benefitting more surgeons and patients. It is also regarded as a good way to raise public attention to the advancement of medical robots and as a booster to promote the education of artificial intelligence in medical field.”