Swarming is a common phenomenon in the animal kingdom: animals band together to find food and evade predators, as if someone were directing them. This inspired Professor Li ZHANG of the Department of Mechanical and Automation Engineering, The Chinese University of Hong Kong (CUHK), to build the same mechanism into the medical microrobots he has developed, in collaboration with Professor Qi DOU's team from the Department of Computer Science and Engineering. The team created an AI-powered navigation system that enables millions of microrobots to navigate autonomously and adaptively and to reconfigure their motion, much as a swarm of honeybees does, but inside the human body. This strengthens their ability to perform tasks such as delivering targeted treatments to hard-to-reach lesions.

Current microrobots, as the name suggests, have the advantage of being small, but they are usually operated as single units. Because each unit carries only a tiny payload, delivering enough drug to a target lesion means operating hundreds of thousands of units at the same time. Manipulating millions of microrobots inside the human body is a major challenge in itself: the environment is complex and pulsatile, full of tortuous branches and flowing fluids. Even when operators manually use external magnets and imaging for navigation and real-time positioning, tasks can still fail.

AI: the commander

To increase the swarm intelligence of the microrobots so that they can accomplish tasks reliably, the team built its many years of experience in microrobot navigation into deep learning algorithms, developing an AI system that lets the swarm act autonomously and make its own decisions in vivo. Professor Zhang says, "The robotic training given to the AI system is a consolidation of past experiences and knowledge in robot manipulation. It grants the swarm the ability to navigate autonomously, avoiding the chance of failure."

At the core of the AI system is a deep neural network (DNN) devised by the team to plan the swarm's distribution pattern in real time and carry out medical tasks. Based on the in vivo environment, it continuously adjusts swarm parameters such as trajectory points, the magnetic field, the swarm centre and the target. The team has also proposed a five-level autonomy framework (Level 0 to Level 4) for the environment-adaptive swarm, defined according to the dynamics of the environment. Each level adds autonomy and requires less manual control, so operators can choose the level that suits the task and working scenario.
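The article does not describe the DNN's internals, but the regulation it performs can be pictured as a closed loop: read the swarm centre, steer the field toward the next trajectory point, repeat. The Python sketch below is a minimal illustration under stated assumptions: the `SwarmParams` class, the `regulate_step` function and the rule that the centre moves a fixed distance along the field heading are all hypothetical, and a simple geometric rule stands in for the trained network. Imaging, environment sensing and swarm-pattern control are left out.

```python
import math
from dataclasses import dataclass


@dataclass
class SwarmParams:
    """Hypothetical container mirroring the parameters the article lists."""
    trajectory: list            # remaining waypoints as (x, y) tuples
    center: tuple               # current swarm centre (x, y)
    target: tuple               # final target (x, y); kept for completeness
    field_heading: float = 0.0  # assumed in-plane field direction, radians


def regulate_step(p: SwarmParams, step_size: float = 1.0, tol: float = 0.5) -> None:
    """One closed-loop update; a geometric rule stands in for the DNN.

    Assumes (hypothetically) that the swarm centre moves up to `step_size`
    along the field heading per step; this is not a physical model.
    """
    if not p.trajectory:
        return  # all waypoints visited, nothing to regulate
    wx, wy = p.trajectory[0]
    cx, cy = p.center
    dist = math.hypot(wx - cx, wy - cy)
    if dist <= tol:
        p.trajectory.pop(0)  # waypoint reached; aim at the next one next step
        return
    p.field_heading = math.atan2(wy - cy, wx - cx)  # steer field toward waypoint
    move = min(step_size, dist)  # avoid overshooting the waypoint
    p.center = (cx + move * math.cos(p.field_heading),
                cy + move * math.sin(p.field_heading))


# Usage: drive a swarm from the origin through two waypoints to its target.
p = SwarmParams(trajectory=[(3.0, 0.0), (3.0, 4.0)], center=(0.0, 0.0), target=(3.0, 4.0))
for _ in range(100):
    if not p.trajectory:
        break
    regulate_step(p)
```

Re-planning in response to the environment would replace the fixed waypoint list; the loop structure (observe, adjust parameters, actuate) stays the same.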

Level 0 corresponds to fully manual operation, the mode commonly used with current microrobots. Operators must be experienced and well trained in how the microrobots work, because they control the actuation field directly to regulate the swarm parameters. External imaging hardware such as optical microscopes is also needed for real-time observation to avoid collisions with obstacles. Manual operation is error-prone, and poor control can cause the swarm to deviate or even split apart.

At Level 4, the microrobot swarm navigates fully autonomously under the AI system, which lets it explore unknown, ever-changing environments. Given the task of delivering targeted therapy to a specific lesion, the system analyses the environment in real time, plans a route to the target and regulates the swarm distribution until the task is complete. If the swarm encounters a dead end or an obstacle, the system adjusts its navigation and re-plans the route on its own, with no human intervention and no extra cost for training operators.
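How the AI re-plans around a dead end is not spelled out here. As a stand-in, the sketch below shows the generic sense-and-replan loop using plain breadth-first search on a hypothetical occupancy grid (0 = free, 1 = blocked). The swarm starts with no knowledge of obstacles, and each time its next step turns out to be blocked it records the obstacle and re-plans from its current position. This is not the DNN-based planner from the paper; `bfs_path`, `navigate` and the grid model are illustrative assumptions.

```python
from collections import deque


def bfs_path(grid, start, goal):
    """Shortest 4-connected path on a 0/1 grid, or None if the goal is unreachable."""
    rows, cols = len(grid), len(grid[0])
    prev = {start: None}
    queue = deque([start])
    while queue:
        cur = queue.popleft()
        if cur == goal:
            path = []
            while cur is not None:  # walk predecessors back to the start
                path.append(cur)
                cur = prev[cur]
            return path[::-1]
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0 and nxt not in prev:
                prev[nxt] = cur
                queue.append(nxt)
    return None


def navigate(true_grid, start, goal):
    """Follow a plan on a partially known map, re-planning when blocked.

    Returns (visited cells, number of re-plans, whether the goal was reached).
    """
    rows, cols = len(true_grid), len(true_grid[0])
    known = [[0] * cols for _ in range(rows)]  # no obstacles known at first
    pos, visited, replans = start, [start], 0
    path = bfs_path(known, pos, goal)
    while pos != goal:
        if path is None:
            return visited, replans, False  # dead end: no route given what is known
        nxt = path[1]  # path[0] is always the current position
        if true_grid[nxt[0]][nxt[1]] == 1:
            known[nxt[0]][nxt[1]] = 1  # obstacle discovered: update map, re-plan
            replans += 1
            path = bfs_path(known, pos, goal)
            continue
        pos = nxt
        visited.append(pos)
        path = path[1:]
    return visited, replans, True


true_grid = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
visited, replans, reached = navigate(true_grid, (0, 0), (2, 0))
print(reached, replans, visited[-1])  # True 2 (2, 0)
```

Breadth-first search is chosen here only because it is short and returns a shortest path on an unweighted grid.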

The team evaluated the effectiveness and reliability of the AI-powered microrobot navigation system ex vivo in the blood vessels of human placental tissue, with support from the Prince of Wales Hospital. The tests showed that the system navigates autonomously and effectively in this highly complex lumen environment.

Professor Zhang predicts, “As the automated navigation system matures, we hope that one day it will enable surgeons to deploy a microrobot swarm for non-invasive or minimally invasive therapeutic application in the human body without specialised training, in particular in those tiny and tortuous lumens deep inside the body.”

Highly adaptive pattern formations

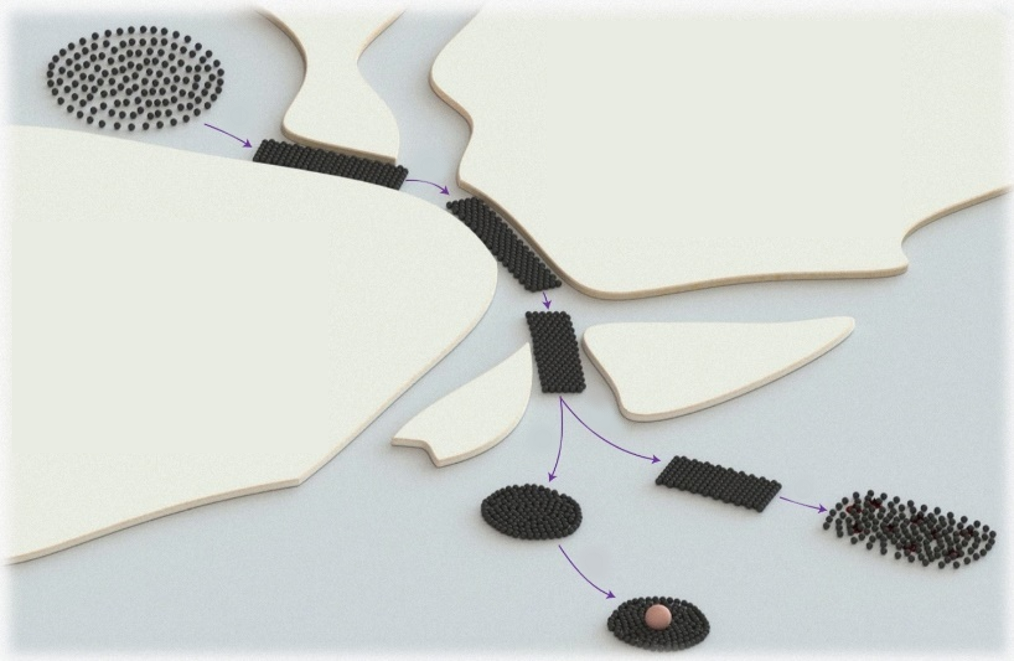

The microrobot swarm is made of magnetic nanoparticles about 400 nm in diameter, roughly 1/200 the thickness of a human hair. By applying programmed magnetic fields, the system can switch the swarm between three pattern configurations, each suited to different functions: spreading swarm (SS), ribbon-like swarm (RS) and vortex-like swarm (VS). The team hopes that the AI system can be applied to endovascular drug delivery or targeted therapy, for example to treat ischemic stroke in the brain or cancerous lesions deep inside the body.
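The size comparison can be checked with simple arithmetic: if a particle about 400 nm across is 1/200 the thickness of a hair, the implied hair diameter is

$$
400\ \text{nm} \times 200 = 80\,000\ \text{nm} = 80\ \mu\text{m},
$$

which is in line with commonly quoted human hair diameters of several tens of micrometres.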

The findings have been published in the internationally renowned journal Nature Machine Intelligence. The full paper is available at https://www.nature.com/articles/s42256-022-00482-8.